Migrate Data from MongoDB to PostgreSQL

ETL (Extract, Transform, Load)

ETL (Extract, Transform, Load) is a fundamental process in databases and data warehousing used to move and process data from one system to another. It consists of three main steps:

- Extract – Retrieve data from multiple sources (e.g., databases, APIs, files).

- Transform – Clean, filter, and modify the data to fit the target system’s structure and requirements.

- Load – Store the transformed data into the target database or data warehouse.

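ETL is widely used for data migration, integration, and analytics. For example, moving data from MongoDB to PostgreSQL is an ETL process: documents are extracted from MongoDB, transformed to fit a relational schema, and loaded into PostgreSQL tables, where they are stored in a structured form and can be queried efficiently with SQL.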

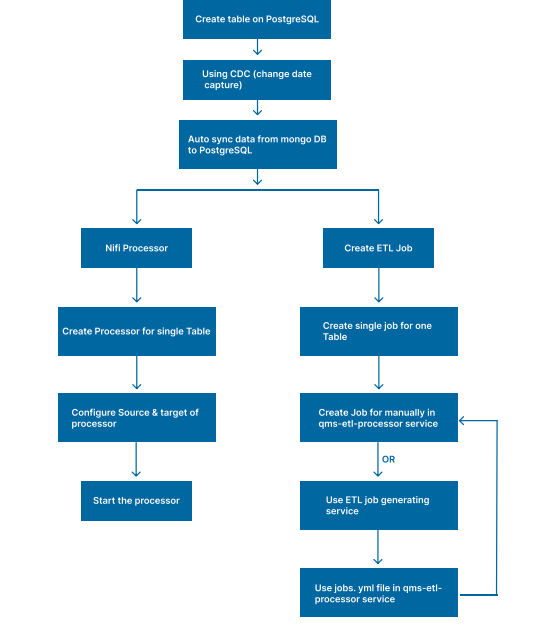
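The three steps above can be sketched end to end in Python. This is a minimal illustration under stated assumptions, not the processor's implementation: an in-memory list of dicts stands in for a MongoDB collection, SQLite (from the standard library) stands in for PostgreSQL, and the `customers` table, its columns, and the sample documents are hypothetical.

```python
import sqlite3
from datetime import datetime

# Hypothetical MongoDB-style documents standing in for a source collection.
SOURCE_DOCS = [
    {"_id": "a1", "name": "Alice", "address": {"city": "Oslo"}, "creationDate": "2024-01-05T10:00:00"},
    {"_id": "b2", "name": "  Bob ", "address": {}, "creationDate": "2024-01-06T12:30:00"},
    {"_id": "c3", "name": None, "creationDate": "2024-01-07T08:15:00"},
]


def extract(docs):
    """Extract: yield raw documents from the source (a list here, a collection in practice)."""
    yield from docs


def transform(doc):
    """Transform: clean and flatten one document into a row tuple, or return None to filter it out."""
    name = (doc.get("name") or "").strip()
    if not name:
        return None  # drop documents without a usable name
    city = (doc.get("address") or {}).get("city")  # nested field -> flat column
    created = datetime.fromisoformat(doc["creationDate"]).isoformat()  # validate/normalize timestamp
    return (str(doc["_id"]), name, city, created)


def load(conn, rows):
    """Load: write rows into the target table (SQLite stands in for PostgreSQL)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id TEXT PRIMARY KEY, name TEXT NOT NULL, city TEXT, creation_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()


def run_etl(docs, conn):
    """Run extract -> transform -> load and return the number of rows loaded."""
    rows = [row for row in map(transform, extract(docs)) if row is not None]
    load(conn, rows)
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_etl(SOURCE_DOCS, conn))  # 2 (the document without a name is filtered out)
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

Against a real PostgreSQL target, the load step would look the same apart from the driver (for example psycopg, which uses `%s` placeholders instead of `?`).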

Change Data Capture (CDC)

The ETL processor supports real-time Change Data Capture (CDC) to keep data synchronized between MongoDB and PostgreSQL. With CDC:

- Watchers monitor MongoDB collections for real-time changes.

- Changes (inserts, updates, replacements, and deletes) are automatically captured and replicated to PostgreSQL.

- Each ETL job can have its own CDC configuration, so change capture can be set up independently for each job.

- CDC complements batch ETL, providing both real-time and scheduled data synchronization.
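The CDC flow described above can be sketched as a function that replicates one change event into the target table. This is a hedged sketch, not the processor's actual watcher: the event dictionaries follow the shape of MongoDB change stream documents (`operationType`, `documentKey`, `fullDocument`, and `updateDescription` with `updatedFields` and `removedFields`), while the `customers` table, the hypothetical `COLUMN_MAP` from field paths to columns, and the use of SQLite as a stand-in for PostgreSQL are assumptions made for illustration.

```python
import sqlite3

# Hypothetical mapping from MongoDB field paths to columns of the target table.
COLUMN_MAP = {"name": "name", "address.city": "city"}


def flatten(doc, prefix=""):
    """Flatten nested dicts into dotted paths: {"address": {"city": "Oslo"}} -> {"address.city": "Oslo"}."""
    out = {}
    for key, value in doc.items():
        path = prefix + key
        if isinstance(value, dict):
            out.update(flatten(value, path + "."))
        else:
            out[path] = value
    return out


def apply_change(conn, event):
    """Replicate one change-stream-style event into the `customers` table."""
    op = event["operationType"]
    key = str(event["documentKey"]["_id"])

    if op in ("insert", "replace"):
        # The full document is available: upsert the whole row (missing fields become NULL).
        fields = flatten(event["fullDocument"])
        cols = list(COLUMN_MAP.values())
        col_list = ", ".join(cols)
        placeholders = ", ".join("?" for _ in range(len(cols) + 1))
        set_clause = ", ".join(f"{c} = excluded.{c}" for c in cols)
        conn.execute(
            f"INSERT INTO customers (id, {col_list}) VALUES ({placeholders}) "
            f"ON CONFLICT(id) DO UPDATE SET {set_clause}",
            [key, *[fields.get(path) for path in COLUMN_MAP]],
        )
    elif op == "update":
        desc = event["updateDescription"]
        updated = desc.get("updatedFields", {})
        removed = desc.get("removedFields", [])
        changes = {}
        # A removed or overwritten field clears every mapped column at or below that path...
        for path in [*updated, *removed]:
            for src, col in COLUMN_MAP.items():
                if src == path or src.startswith(path + "."):
                    changes[col] = None
        # ...then the newly set values are filled in.
        for path, value in flatten(updated).items():
            if path in COLUMN_MAP:
                changes[COLUMN_MAP[path]] = value
        if changes:
            # Column names come from COLUMN_MAP (trusted); values are parameterized.
            assignments = ", ".join(f"{col} = ?" for col in changes)
            conn.execute(f"UPDATE customers SET {assignments} WHERE id = ?", [*changes.values(), key])
    elif op == "delete":
        conn.execute("DELETE FROM customers WHERE id = ?", (key,))
    # Other event types (drop, rename, invalidate, ...) are ignored in this sketch.
    conn.commit()
```

In a live pipeline the events would typically come from a change stream, for example by iterating over pymongo's `Collection.watch()`. The `INSERT ... ON CONFLICT ... DO UPDATE` upsert is also valid PostgreSQL syntax, which keeps replayed insert and replace events idempotent.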

Methods for Implementing CDC

1) Apache NiFi

Apache NiFi is a powerful data flow automation tool suited to ETL processes and real-time data streaming. Originally developed by the NSA and later donated to the Apache Software Foundation as an open-source project, NiFi offers:

- An intuitive drag-and-drop interface for building complex data pipelines.

- Support for multiple data sources and destinations, making integration seamless.

- Real-time data ingestion and transformation, ideal for high-volume workloads.

2) Custom Jobs

Custom ETL jobs automate data transfer between databases. These jobs:

- Migrate data from MongoDB to PostgreSQL on a scheduled basis (e.g., daily).

- Define the source and target databases for each job.

- Process data incrementally based on a specific field (e.g., creationDate).

- Handle data in batches (e.g., 500 records at a time for better performance).

- Leverage CDC to track and update changes, ensuring data consistency with minimal manual intervention.
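The incremental, batched job described above can be sketched as follows. This is a minimal, hypothetical illustration rather than the project's job code: an in-memory list stands in for the MongoDB collection, SQLite stands in for PostgreSQL, the `events` table and `payload` field are invented, and `_id` values are assumed to be mutually comparable (strings here). The watermark is the pair (`creationDate`, `_id`) rather than creationDate alone, so documents that share a timestamp are not skipped at a batch boundary.

```python
import sqlite3

BATCH_SIZE = 500  # records per batch, as in the example above


def fetch_batch(source, watermark, limit):
    """Return up to `limit` documents ordered by (creationDate, _id) that come after `watermark`.

    `source` is an in-memory list standing in for a MongoDB collection.
    """
    ordered = sorted(source, key=lambda d: (d["creationDate"], d["_id"]))
    return [d for d in ordered if (d["creationDate"], d["_id"]) > watermark][:limit]


def run_incremental_job(source, conn, watermark=("", ""), batch_size=BATCH_SIZE):
    """Copy documents newer than `watermark` in batches; return (new_watermark, rows_processed)."""
    processed = 0
    while True:
        batch = fetch_batch(source, watermark, batch_size)
        if not batch:
            break
        conn.executemany(
            "INSERT INTO events (id, creation_date, payload) VALUES (?, ?, ?) "
            "ON CONFLICT(id) DO NOTHING",  # re-running a batch does not create duplicates
            [(d["_id"], d["creationDate"], d.get("payload")) for d in batch],
        )
        conn.commit()  # one transaction per batch
        last = batch[-1]
        watermark = (last["creationDate"], last["_id"])  # advance past the last row loaded
        processed += len(batch)
    return watermark, processed


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id TEXT PRIMARY KEY, creation_date TEXT, payload TEXT)")
    docs = [{"_id": f"id{i:04d}", "creationDate": "2024-01-01", "payload": str(i)} for i in range(1200)]
    watermark, n = run_incremental_job(docs, conn)
    print(n, watermark)  # 1200 ('2024-01-01', 'id1199')
```

Against MongoDB, `fetch_batch` would roughly correspond to a pymongo query such as `find({"$or": [{"creationDate": {"$gt": d}}, {"creationDate": d, "_id": {"$gt": i}}]}).sort([("creationDate", 1), ("_id", 1)]).limit(500)`, ideally backed by an index on those two fields. The returned watermark would be persisted between scheduled (e.g., daily) runs so each run picks up where the last one stopped.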